This page gives a quick overview of the App SDK and introduces you to the general topic of photorealistic rendering.

I. Introduction

The V-Ray Application SDK allows third-party integrators to initiate and control a rendering process that uses the V-Ray engine. It provides a high-level API for rendering and manipulating a V-Ray scene either inside the host process or outside it, using distributed rendering. The scene can be created in memory by translating the host application's native format to V-Ray plugins, or it can be loaded from a file, possibly exported from another application.

II. Setup

To use the V-Ray Application SDK, the VRAY_SDK environment variable must be set. To use the Python binding, the python folder must also be in the Python module search path (e.g. by adding it to PYTHONPATH).

The setenv script sets both environment variables. To use it, run the following from the corresponding command-line shell:

- setenv.bat (Windows)

- source setenv.sh (Linux/macOS)

When VRAY_SDK is set, the App SDK binding searches for the VRaySDKLibrary binary and its dependencies inside VRAY_SDK/bin. If the directory structure of a product that uses the App SDK does not keep the binaries inside a /bin folder, VRAY_APPSDK_BIN can be used instead of VRAY_SDK. In that case, the App SDK binding tries to load the binaries directly from the folder that VRAY_APPSDK_BIN points to.

If environment variables cannot be used, the App SDK API also allows setting the search path at runtime. See the API documentation for the corresponding binding.
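To make the lookup rules above concrete, here is a minimal Python sketch of how the binary folder could be resolved from the environment. resolve_appsdk_bin_dir is a hypothetical helper, not part of the SDK, and giving VRAY_APPSDK_BIN precedence when both variables are set is an assumption; the actual bindings may order these checks differently.

```python
import os


def resolve_appsdk_bin_dir(env=None):
    """Return the folder the App SDK binaries would be loaded from, or None.

    Hypothetical helper: VRAY_APPSDK_BIN points directly at the binaries,
    otherwise VRAY_SDK/bin is used. Preferring VRAY_APPSDK_BIN when both
    variables are set is an assumption, not documented SDK behavior.
    """
    env = os.environ if env is None else env
    appsdk_bin = env.get('VRAY_APPSDK_BIN')
    if appsdk_bin:
        return appsdk_bin  # binaries live directly in this folder
    sdk_root = env.get('VRAY_SDK')
    if sdk_root:
        return os.path.join(sdk_root, 'bin')  # standard package layout
    return None  # nothing configured; set the search path at runtime instead
```

Such a check can be useful in a setup script, e.g. verifying with os.path.isdir that the returned folder exists before importing the binding.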

III. Package Contents

- bin - V-Ray core binaries. Includes the V-Ray Application SDK library

- cpp - C++ header files

- dotnet - .NET Framework 4.0 (Mono) V-Ray Application SDK DLLs

- dotnetcore - .NET Core 3.1 V-Ray Application SDK DLLs

- examples - Code samples for all supported languages

- help - SDK documentation and API guide for offline use

- node - Node.js and Electron V-Ray Application SDK module/binding

- python - Python V-Ray Application SDK module

- scenes - Sample scenes made by Chaos

- tools - V-Ray tools for viewing/converting assets etc.

- setenv.bat/sh - script that sets up a local environment for running the V-Ray Application SDK. To use it, run the script in a command window (in bash, use "source setenv.sh"). It updates the environment variables needed by the SDK.

IV. Basic Rendering

The V-Ray Application SDK exposes a VRayRenderer class that initiates and controls any rendering process. The class defines high-level methods for creating or loading a V-Ray scene and rendering it. It hides the inner complexities of the V-Ray engine while keeping its powerful capabilities.

The basic workflow for starting a render process usually consists of the following steps:

- Instantiating the configurable VRayRenderer class

- Either or both of the following:

  - Creating new instances of V-Ray render plugins to translate your native scene description to V-Ray and setting their parameters

  - Loading a scene by specifying a path to a .vrscene file (the V-Ray native format for serializing scenes)

- Invoking the method for rendering

- Waiting for the image to become available

- Cleaning up memory resources by closing the VRayRenderer

All language implementations of the VRayRenderer class offer a method without arguments (start()) that is used to start the rendering of the currently loaded scene. The method is a non-blocking call which internally runs the V-Ray engine in a separate thread. Thus the rendering can conveniently take place as a background process without the need to use language-specific tools for creating threads.
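The non-blocking behavior of start() can be illustrated with a toy stand-in. ToyRenderer below is hypothetical and only mimics the calling pattern: start() returns at once while a background thread does the work, and wait_for_render_end() blocks until the work completes or a timeout expires. The examples in this section pass 6000 to waitForRenderEnd; the sketch assumes that value is a timeout in milliseconds.

```python
import threading
import time


class ToyRenderer:
    """Toy stand-in (not the SDK) for a non-blocking start() plus a timed wait."""

    def __init__(self, render_seconds):
        self._render_seconds = render_seconds
        self._done = threading.Event()
        self._thread = None

    def start(self):
        # Returns immediately; the "engine" runs on a background thread.
        self._done.clear()
        self._thread = threading.Thread(target=self._render, daemon=True)
        self._thread.start()

    def _render(self):
        time.sleep(self._render_seconds)  # placeholder for the rendering work
        self._done.set()

    def wait_for_render_end(self, timeout_ms=None):
        # True if rendering finished, False if the timeout expired first.
        timeout = None if timeout_ms is None else timeout_ms / 1000.0
        return self._done.wait(timeout)
```

Because the wait can time out, code that needs the final image should check the result (or the renderer state) rather than assume the render has finished.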

The VRayRenderer class gives access to the current state of the rendered image at any point in time. Whether the render process has finished or not, an image can be extracted to track the progress of the rendering. All language bindings expose a method that returns an image object which holds the rendered image data at the time of the request. This image class can be used for direct display or to save the rendered image to a file in one of several supported popular compression formats.

import vray

with vray.VRayRenderer() as renderer:
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd(6000)
    image = renderer.getImage()
    image.save('intro.png')

#include "vraysdk.hpp"

using namespace VRay;

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.load("intro.vrscene");
    renderer.start();
    renderer.waitForRenderEnd(6000);
    LocalVRayImage image(renderer.getImage());
    image->saveToPng("intro.png");
    return 0;
}

using VRay;

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
    VRayImage image = renderer.GetImage();
    image.SaveToPNG("intro.png");
}

var vray = require('vray');

var renderer = vray.VRayRenderer();
renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function() {
            var image = renderer.getImage();
            image.save('intro.png', function() {
                renderer.close();
            });
        });
    });
});

V. Render Modes

The V-Ray Application SDK offers the ability to run the V-Ray engine in two distinct render modes - Production and Interactive.

Production mode is suitable for obtaining the final image once the scene has been carefully configured, since the render process can take a long time before the whole image is available.

Interactive mode, on the other hand, is very useful for fast image previews with progressively improving quality. This makes it the perfect choice for experimenting with scene settings and content while receiving fast render feedback.

Each render mode comes in two flavors: CPU and GPU. The former uses CPU resources, while the latter takes advantage of the computing power of the graphics card. GPU rendering is further subdivided into two modes, CUDA and OptiX (both NVIDIA only), depending on the technology used on the GPU.

The type of rendering that will be initiated can be chosen easily through the renderMode property of the VRayRenderer object. The default render mode for the VRayRenderer class is Interactive (CPU). The render mode can be changed between renders to avoid having to re-create or re-load the scene. This allows you to work interactively on a scene with V-Ray and then to switch to production mode for final rendering without any overhead.

with vray.VRayRenderer() as renderer:
    renderer.renderMode = 'production'
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd(6000)
    image = renderer.getImage()
    image.save('intro.png')

VRayInit init(NULL, true);
VRayRenderer renderer;
renderer.setRenderMode(VRayRenderer::RenderMode::RENDER_MODE_PRODUCTION);
renderer.load("intro.vrscene");
renderer.start();
renderer.waitForRenderEnd(6000);
LocalVRayImage image(renderer.getImage());
image->saveToPng("intro.png");

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.RenderMode = RenderMode.PRODUCTION;
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
    VRayImage image = renderer.GetImage();
    image.SaveToPNG("intro.png");
}

var renderer = vray.VRayRenderer();
renderer.renderMode = 'production';
renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function() {
            var image = renderer.getImage();
            image.save('intro.png', function() {
                renderer.close();
            });
        });
    });
});

VI. Common Events

During rendering, which runs in the background in a separate thread, V-Ray emits a number of events that are available to App SDK users. You can subscribe to these events through the main VRayRenderer class.

The V-Ray Application SDK provides the means for attaching callbacks that execute custom client logic when an event occurs. The callback code is executed in a separate thread that is different from the main V-Ray rendering thread. This design keeps the V-Ray rendering process fast without additional overhead and ensures that slow operations in client callback code do not affect the total rendering time. Keep in mind, however, that slow user callbacks may delay the execution of other callbacks. Each callback includes a time 'instant' argument that gives the exact time the event occurred. The callback itself may be executed at a noticeably different moment, because callbacks are queued and run asynchronously.
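The delivery model described above, where an event is timestamped when it happens but handled later on a separate thread, can be sketched with Python's standard queue and threading modules. CallbackDispatcher is a hypothetical toy, not the SDK's implementation; it shows why a slow callback delays the ones queued after it and why the instant argument can differ noticeably from the moment a callback actually runs.

```python
import queue
import threading
import time


class CallbackDispatcher:
    """Toy model of asynchronous event delivery (not the SDK's implementation)."""

    _STOP = object()

    def __init__(self):
        self._events = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def emit(self, callback, *args):
        # Called from the "engine" side: stamp the event and return immediately.
        self._events.put((callback, args, time.monotonic()))

    def _run(self):
        # A single worker runs callbacks in order, so a slow one delays the rest.
        while True:
            item = self._events.get()
            if item is self._STOP:
                return
            callback, args, instant = item
            callback(*args, instant)  # 'instant' is the emit time, not "now"

    def close(self):
        # Deliver everything already queued, then stop the worker thread.
        self._events.put(self._STOP)
        self._worker.join()
```

The practical takeaway matches the text: keep callbacks short, and use the instant argument rather than the current time when you need to know when an event occurred.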

The events can be broadly classified into three types: events common for all render modes, events specific to bucket rendering and events specific to progressive rendering. This section covers the events that are emitted regardless of the type of sampling.

The common render events occur when:

- The renderer state changes (e.g. starts rendering, stops rendering)

- V-Ray outputs text messages prior to and during rendering

- V-Ray switches the current task (e.g. loading, rendering) and reports progress percentage

State Changed Event

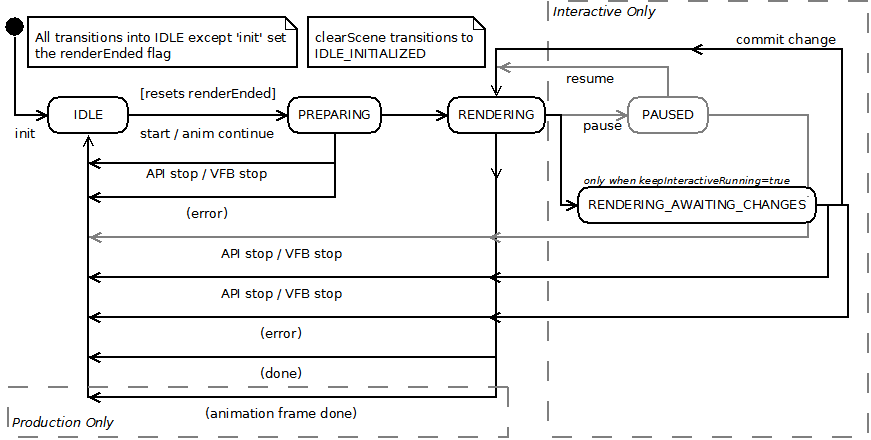

The state change event is emitted every time the renderer transitions from one state to another, such as when it starts rendering, when the image is done, or when an error occurs and rendering stops. This is the main place to implement user logic related to starting and completing renders.

Unless you start rendering with startSync() instead of the asynchronous start() method, do not change the scene or the renderer while it is in the PREPARING state; changes attempted before it leaves that state are rejected.

The final image is available when the renderer gets to the IDLE_DONE state. Intermediate images are also available during rendering.
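A common pattern is to react to IDLE_DONE from the state callback instead of polling. The sketch below uses plain strings (PREPARING, RENDERING, IDLE_DONE) as hypothetical stand-ins for the state values; the real bindings expose their own constants or enums, and RENDERING in particular is an assumed name. RenderStateTracker is also hypothetical; its on_state_changed method has the same parameters as the Python onStateChanged callback in the examples that follow, so it could presumably be passed to setOnStateChanged.

```python
import threading

# Hypothetical stand-ins for the bindings' renderer state values.
PREPARING = 'PREPARING'
RENDERING = 'RENDERING'
IDLE_DONE = 'IDLE_DONE'


class RenderStateTracker:
    """Records state transitions and signals when the final image is ready."""

    def __init__(self):
        self._lock = threading.Lock()  # callbacks run on a non-main thread
        self.state = None
        self.history = []
        self.done = threading.Event()

    def on_state_changed(self, renderer, old_state, new_state, instant):
        with self._lock:
            self.state = new_state
            self.history.append((old_state, new_state, instant))
        if new_state == IDLE_DONE:
            self.done.set()  # the final image can now be retrieved

    def changes_allowed(self):
        # Models only the PREPARING rule for the asynchronous start();
        # any other restrictions are not represented here.
        with self._lock:
            return self.state != PREPARING
```

The main thread can then call tracker.done.wait(timeout) and fetch the image only once it returns True.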

def onStateChanged(renderer, oldState, newState, instant):
    print('State changed from', oldState, 'to', newState)

with vray.VRayRenderer() as renderer:
    renderer.setOnStateChanged(onStateChanged)
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd(6000)

void onStateChanged(VRayRenderer& renderer, RendererState oldState, RendererState newState, double instant, void* userData) {
    printf("State changed from %d to %d\n", oldState, newState);
}

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.setOnStateChanged(onStateChanged);
    renderer.load("intro.vrscene");
    renderer.start();
    renderer.waitForRenderEnd(6000);
    return 0;
}

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.StateChanged += new EventHandler<StateChangedEventArgs>((source, e) =>
    {
        Console.WriteLine("State changed from {0} to {1}", e.OldState, e.NewState);
    });
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
}

var renderer = vray.VRayRenderer();
renderer.on('stateChanged', function(oldState, newState, instant) {
    console.log('State changed from ' + oldState + ' to ' + newState);
});

renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function() {
            renderer.close();
        });
    });
});

Log Message Event

Output messages are produced by the V-Ray engine during scene loading and rendering, and they can be captured by subscribing to the log message event. The callback receives the text of the message and its log level (info, warning, error or debug).
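Instead of printing, the messages can be forwarded to Python's standard logging module. make_log_forwarder is a hypothetical helper; the level mapping is passed in so the sketch runs without the SDK. With the SDK you would presumably build the map from vray.LOGLEVEL_ERROR, vray.LOGLEVEL_WARNING and vray.LOGLEVEL_INFO, as used in the examples below, and any unmapped level (such as debug) falls back to logging.DEBUG.

```python
import logging


def make_log_forwarder(level_map, logger, default_level=logging.DEBUG):
    """Build a callback with the onLogMessage parameters that forwards to `logger`.

    level_map maps the binding's log level values to logging levels; levels
    missing from the map (e.g. debug messages) are logged at default_level.
    """
    def on_log_message(renderer, message, level, instant):
        logger.log(level_map.get(level, default_level), '%s', message)
    return on_log_message
```

Debug output can then be silenced or enabled through the logger's level (logger.setLevel(logging.INFO), for example) rather than by editing the callback.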

def onLogMessage(renderer, message, level, instant):
    if level == vray.LOGLEVEL_ERROR:
        print("[ERROR]", message)
    elif level == vray.LOGLEVEL_WARNING:
        print("[Warning]", message)
    elif level == vray.LOGLEVEL_INFO:
        print("[info]", message)
    # Uncomment for testing, but you might want to ignore these in real code
    #else: print("[debug]", message)

with vray.VRayRenderer() as renderer:
    renderer.setOnLogMessage(onLogMessage)
    # ...

void onLogMessage(VRayRenderer &renderer, const char* message, MessageLevel level, double instant, void* userData) {
    switch (level) {
    case MessageError:
        printf("[ERROR] %s\n", message);
        break;
    case MessageWarning:
        printf("[Warning] %s\n", message);
        break;
    case MessageInfo:
        printf("[info] %s\n", message);
        break;
    case MessageDebug:
        // Uncomment for testing, but you might want to ignore these in real code
        //printf("[debug] %s\n", message);
        break;
    }
}

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.setOnLogMessage(onLogMessage);
    // ...
    return 0;
}

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.LogMessage += new EventHandler<MessageEventArgs>((source, e) =>
    {
        // You can remove the if for testing, but you might want to ignore Debug in real code
        if (e.LogLevel != LogLevelType.Debug)
        {
            Console.WriteLine(String.Format("[{0}] {1}", e.LogLevel.ToString(), e.Message));
        }
    });
    // ...
}

var renderer = vray.VRayRenderer();
renderer.on('logMessage', function(message, level, instant) {
    // console.log inserts a space between its arguments
    if (level == vray.LOGLEVEL_ERROR)
        console.log("[ERROR]", message);
    else if (level == vray.LOGLEVEL_WARNING)
        console.log("[Warning]", message);
    else if (level == vray.LOGLEVEL_INFO)
        console.log("[info]", message);
    // Uncomment for testing, but you might want to ignore these in real code
    //else console.log("[debug]", message);
});
// ...

Progress Event

Progress events are emitted whenever the current task changes and also when the amount of work done increases. They include a short message with the current task name and two numbers for the total amount of work to do and the work that is already complete.

The following short examples report progress as a percentage:

def printProgress(renderer, msg, progress, total, instant):
    # Guard against a zero total to avoid a ZeroDivisionError in the callback.
    percent = 100.0 * progress / total if total else 0.0
    print('Progress: {0} {1}%'.format(msg, round(percent, 2)))

with vray.VRayRenderer() as renderer:
    renderer.setOnProgress(printProgress)
    # render ...

void printProgress(VRay::VRayRenderer &renderer, const char* message, int progress, int total, double instant, void* userData) {
    printf("Progress: %s %.2f%%\n", message, 100.0 * progress / total);
}

int main() {
    // ...
    renderer.setOnProgress(printProgress);
    // render ...
}

renderer.Progress += new EventHandler<ProgressEventArgs>((source, e) =>
{
    Console.WriteLine(String.Format("Progress: {0} {1:F2}%", e.Message, 100.0 * e.Progress / e.Total));
});
// render ...

var printProgress = function(message, progress, total, instant) {
    console.log('Progress: ' + message + ' ' + (100.0 * progress / total).toFixed(2) + '%');
};

renderer.on('progress', printProgress);

// render ...
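Because progress events fire whenever the amount of completed work increases, printing every one of them can flood the console. The following sketch only reports when the task changes or the percentage has grown by at least step points, and treats a zero total as 0%. ProgressReporter is a hypothetical helper whose call parameters match the Python printProgress callback above.

```python
class ProgressReporter:
    """Throttled progress callback (hypothetical helper, not part of the SDK)."""

    def __init__(self, step=5.0):
        self.step = step
        self.lines = []  # reported lines; print or log them as needed
        self._last_msg = None
        self._last_pct = 0.0

    def __call__(self, renderer, msg, progress, total, instant):
        # A zero total means the amount of work is unknown; report 0%.
        pct = 100.0 * progress / total if total > 0 else 0.0
        task_changed = msg != self._last_msg
        if task_changed or pct - self._last_pct >= self.step:
            self._last_msg = msg
            self._last_pct = pct
            self.lines.append('Progress: {0} {1:.2f}%'.format(msg, pct))
```

With the Python binding, an instance could presumably be registered with renderer.setOnProgress(reporter), since the binding accepts any callable with these parameters in the examples above.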

VII. Bucket Events

There are two types of image sampling in Production mode - "bucket" sampling and progressive sampling. Bucket rendering splits the image into small rectangular sub-images, each processed independently by a different local CPU thread or network server (see the Distributed Rendering section). The sub-images are only returned when completely ready. Progressive rendering works the same way as in Interactive mode - the whole image is sampled and images are returned for each sampling pass, reducing noise with each pass. This section covers the events which are specific to Production bucket rendering only. The progressive sampling mode emits the event described in the Progressive Events section further below.

There are two phases in Production bucket rendering - assigning a bucket to a render host (or local thread) and rendering the assigned region of the image. This is why there are two events that are raised for each bucket of the image - initializing a bucket and receiving the image result. Users of the API can subscribe to the events via the main VRayRenderer class.

In Production bucket mode the image is perceived as a grid of rectangular regions, "buckets". The bucket is uniquely identified by the coordinates of its top left corner and the width and height of its rectangular region. The top left corner of the whole image has coordinates (0, 0).

To enable bucket sampling, the VRayRenderer’s render mode must be production and SettingsImageSampler::type=1.
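The coordinate convention above can be illustrated by splitting an image into a grid of buckets. bucket_grid is a hypothetical helper that enumerates buckets row by row; V-Ray uses its own traversal order and bucket size settings, so only the (x, y, width, height) convention with a top-left origin and the clipping of edge buckets are meant to carry over.

```python
def bucket_grid(image_width, image_height, bucket_size):
    """List (x, y, width, height) buckets covering the image, top-left origin.

    Buckets are listed row by row; those on the right and bottom edges are
    clipped to the image bounds.
    """
    if bucket_size <= 0:
        raise ValueError('bucket_size must be positive')
    buckets = []
    for y in range(0, image_height, bucket_size):
        for x in range(0, image_width, bucket_size):
            buckets.append((x, y,
                            min(bucket_size, image_width - x),
                            min(bucket_size, image_height - y)))
    return buckets
```

For a 100x50 image with 48-pixel buckets this yields six regions, the last being (96, 48, 4, 2); the regions together cover exactly 100 * 50 pixels.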

Bucket Init Event

The bucket init event is raised when the main V-Ray thread assigns a bucket to a network render host or local thread. The callback data that is provided for this event is the bucket size and coordinates as well as the name of the render host (if any) as it appears on the network.

def onBucketInit(renderer, bucket, passType, instant):
    print('Starting bucket:')
    print('\t x: ', bucket.x)
    print('\t y: ', bucket.y)
    print('\t width: ', bucket.width)
    print('\t height:', bucket.height)
    print('\t host:', bucket.host)

with vray.VRayRenderer() as renderer:
    renderer.renderMode = 'production'
    renderer.setOnBucketInit(onBucketInit)
    renderer.load('intro.vrscene')
    # Set the sampler type to adaptive (buckets).
    sis = renderer.classes.SettingsImageSampler.getInstanceOrCreate()
    sis.type = 1
    # Disable light cache so we get buckets straight away.
    sgi = renderer.classes.SettingsGI.getInstanceOrCreate()
    sgi.secondary_engine = 2
    renderer.start()
    renderer.waitForRenderEnd()

using namespace VRay;
using namespace VRay::Plugins;

void onBucketInit(VRayRenderer& renderer, int x, int y, int width, int height,
                  const char* host, ImagePassType pass, double instant, void* userData) {
    printf("Starting bucket:\n");
    printf("\t x: %d\n", x);
    printf("\t y: %d\n", y);
    printf("\t width: %d\n", width);
    printf("\t height: %d\n", height);
    printf("\t host: %s\n", host ? host : ""); // host may be absent for local rendering
}

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.setRenderMode(VRayRenderer::RenderMode::RENDER_MODE_PRODUCTION);
    renderer.setOnBucketInit(onBucketInit);
    renderer.load("intro.vrscene");
    // Set the sampler type to adaptive (buckets).
    SettingsImageSampler sis = renderer.getInstanceOrCreate<SettingsImageSampler>();
    sis.set_type(1);
    // Stop LC calculation, so we get buckets immediately.
    SettingsGI sgi = renderer.getInstanceOrCreate<SettingsGI>();
    sgi.set_secondary_engine(2);
    renderer.startSync();
    renderer.waitForRenderEnd();
    return 0;
}

using VRay;
using VRay.Plugins;

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.RenderMode = RenderMode.PRODUCTION;
    renderer.BucketInit += new EventHandler<BucketRegionEventArgs>((source, e) =>
    {
        Console.WriteLine("Starting bucket:");
        Console.WriteLine("\t x:" + e.X);
        Console.WriteLine("\t y:" + e.Y);
        Console.WriteLine("\t width:" + e.Width);
        Console.WriteLine("\t height:" + e.Height);
        Console.WriteLine("\t host:" + e.Host);
    });
    renderer.Load("intro.vrscene");
    // Set the sampler type to adaptive (buckets).
    SettingsImageSampler sis = renderer.GetInstanceOrCreate<SettingsImageSampler>();
    sis.SettingsImageSamplerType = 1;
    // Stop LC calculation, so we get buckets immediately.
    SettingsGI sgi = renderer.GetInstanceOrCreate<SettingsGI>();
    sgi.SecondaryEngine = 2;
    renderer.Start();
    renderer.WaitForRenderEnd();
}

var renderer = vray.VRayRenderer();
renderer.renderMode = 'production';

renderer.on('bucketInit', function(region, passType, instant) {
    console.log('Starting bucket:');
    console.log('\t x:' + region.x);
    console.log('\t y:' + region.y);
    console.log('\t width:' + region.width);
    console.log('\t height:' + region.height);
    console.log('\t host:' + region.host);
});

renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    // Set the sampler type to adaptive (buckets).
    var sis = renderer.classes.SettingsImageSampler.getInstanceOrCreate();
    sis.type = 1;
    // Disable light cache so we get buckets straight away.
    var sgi = renderer.classes.SettingsGI.getInstanceOrCreate();
    sgi.secondary_engine = 2;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(function() {
            renderer.close();
        });
    });
});

Bucket Ready Event

The bucket ready event is raised when the render host that has been assigned a bucket has finished rendering the part of the image and has sent its result to the main V-Ray thread. The returned callback data for the event contains the size and coordinates of the region, the render host name and the produced image.

def onBucketReady(renderer, bucket, passType, instant):
    print('Bucket ready:')
    print('\t x: ', bucket.x)
    print('\t y: ', bucket.y)
    print('\t width: ', bucket.width)
    print('\t height:', bucket.height)
    print('\t host:', bucket.host)
    fileName = 'intro-{0}-{1}.png'.format(bucket.x, bucket.y)
    bucket.save(fileName)

with vray.VRayRenderer() as renderer:
    renderer.renderMode = 'production'
    renderer.setOnBucketReady(onBucketReady)
    renderer.load('intro.vrscene')
    # Set the sampler type to adaptive (buckets).
    sis = renderer.classes.SettingsImageSampler.getInstanceOrCreate()
    sis.type = 1
    # Disable light cache so we get buckets straight away.
    sgi = renderer.classes.SettingsGI.getInstanceOrCreate()
    sgi.secondary_engine = 2
    renderer.start()
    renderer.waitForRenderEnd()

using namespace VRay;
using namespace VRay::Plugins;

void onBucketReadyCallback(VRayRenderer& renderer, int x, int y, const char* host,
                           VRayImage* image, ImagePassType pass, double instant, void* userData) {
    printf("Bucket ready:\n");
    printf("\t x: %d\n", x);
    printf("\t y: %d\n", y);
    printf("\t width: %d\n", image->getWidth());
    printf("\t height: %d\n", image->getHeight());
    printf("\t host: %s\n", host ? host : ""); // host may be absent for local rendering
    char fileName[64];
    snprintf(fileName, sizeof(fileName), "intro-%d-%d.png", x, y);
    image->saveToPng(fileName);
}

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.setRenderMode(VRayRenderer::RenderMode::RENDER_MODE_PRODUCTION);
    renderer.setOnBucketReady(onBucketReadyCallback);
    renderer.load("intro.vrscene");
    // Set the sampler type to adaptive (buckets).
    SettingsImageSampler sis = renderer.getInstanceOrCreate<SettingsImageSampler>();
    sis.set_type(1);
    // Stop LC calculation, so we get buckets immediately.
    SettingsGI sgi = renderer.getInstanceOrCreate<SettingsGI>();
    sgi.set_secondary_engine(2);
    renderer.startSync();
    renderer.waitForRenderEnd();
    return 0;
}

using VRay;
using VRay.Plugins;

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.RenderMode = RenderMode.PRODUCTION;
    renderer.BucketReady += new EventHandler<BucketImageEventArgs>((source, e) =>
    {
        Console.WriteLine("Bucket ready:");
        Console.WriteLine("\t x:" + e.X);
        Console.WriteLine("\t y:" + e.Y);
        Console.WriteLine("\t width:" + e.Width);
        Console.WriteLine("\t height:" + e.Height);
        Console.WriteLine("\t host:" + e.Host);
        VRayImage image = e.Image;
        image.SaveToPNG(string.Format("intro-{0}-{1}.png", e.X, e.Y));
        image.Dispose();
    });
    renderer.Load("intro.vrscene");
    // Set the sampler type to adaptive (buckets).
    SettingsImageSampler sis = renderer.GetInstanceOrCreate<SettingsImageSampler>();
    sis.SettingsImageSamplerType = 1;
    // Stop LC calculation, so we get buckets immediately.
    SettingsGI sgi = renderer.GetInstanceOrCreate<SettingsGI>();
    sgi.SecondaryEngine = 2;
    renderer.Start();
    renderer.WaitForRenderEnd();
}

var renderer = vray.VRayRenderer();
renderer.renderMode = 'production';

renderer.on('bucketReady', function(bucket, passType, instant) {
    console.log('Bucket ready:');
    console.log('\t x:' + bucket.x);
    console.log('\t y:' + bucket.y);
    console.log('\t width:' + bucket.width);
    console.log('\t height:' + bucket.height);
    console.log('\t host:' + bucket.host);
    var fileName = 'intro-' + bucket.x + '-' + bucket.y + '.png';
    bucket.save(fileName, function() {
        bucket.close();
    });
});

renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    // Set the sampler type to adaptive (buckets).
    var sis = renderer.classes.SettingsImageSampler.getInstanceOrCreate();
    sis.type = 1;
    // Disable light cache so we get buckets straight away.
    var sgi = renderer.classes.SettingsGI.getInstanceOrCreate();
    sgi.secondary_engine = 2;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(function() {
            renderer.close();
        });
    });
});
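Since each bucket ready event carries the region's top-left coordinates, buckets can be stitched back into a full frame. The sketch below uses plain nested lists as a stand-in for pixel data; paste_bucket is a hypothetical helper, and how you extract pixels from a bucket's image depends on the binding.

```python
def paste_bucket(canvas, x, y, bucket_rows):
    """Copy bucket_rows (a list of pixel rows) into canvas with its top-left at (x, y).

    canvas is a list of equally long rows; (0, 0) is the top-left corner.
    """
    height = len(canvas)
    width = len(canvas[0]) if canvas else 0
    if x < 0 or y < 0 or y + len(bucket_rows) > height or any(
            x + len(row) > width for row in bucket_rows):
        raise ValueError('bucket does not fit inside the canvas')
    for offset, row in enumerate(bucket_rows):
        # Slice assignment of equal length overwrites pixels in place.
        canvas[y + offset][x:x + len(row)] = row
    return canvas
```

Because bucket callbacks run on a callback thread, guard a shared canvas with a lock if another thread reads it while rendering is in progress.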

VIII. Progressive Events

When rendering in Interactive mode or in Production with the image sampler set for progressive sampling (instead of buckets), the whole image is sampled at once and the progressiveImageUpdated event is emitted for each sampling pass with a more refined image. The purpose of Interactive mode is to allow users to receive fast feedback for the scene they have configured. Noisy images are quickly available when the Interactive render process starts.

counter = 0

def onImageUpdated(renderer, image, index, passType, instant):
    global counter
    counter += 1
    fileName = 'intro-{0}.jpeg'.format(counter)
    image.save(fileName)

with vray.VRayRenderer() as renderer:
    renderer.setOnProgressiveImageUpdated(onImageUpdated)
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd()

int counter = 0;

void onImageUpdated(VRayRenderer& renderer, VRayImage* image,
                    unsigned long long index, ImagePassType passType, double instant, void* userData) {
    char fileName[64];
    snprintf(fileName, sizeof(fileName), "intro-%d.jpeg", ++counter);
    image->saveToJpeg(fileName);
}

int main() {
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    renderer.setOnProgressiveImageUpdated(onImageUpdated);
    renderer.load("intro.vrscene");
    renderer.startSync();
    renderer.waitForRenderEnd();
    return 0;
}

using (VRayRenderer renderer = new VRayRenderer())
{
    int counter = 0;
    renderer.ProgressiveImageUpdated += new EventHandler<VRayImageEventArgs>((source, e) =>
    {
        string fileName = string.Format("intro-{0}.jpeg", ++counter);
        e.Image.SaveToJPEG(fileName);
        e.Image.Dispose();
    });
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd();
}

var renderer = vray.VRayRenderer();
var counter = 0;

renderer.on('progressiveImageUpdated', function(image, index, passType, instant) {
    var fileName = 'intro-' + (++counter) + '.jpeg';
    image.save(fileName, function() {
        image.close();
    });
});

renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(function() {
            renderer.close();
        });
    });
});
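Why each progressive pass yields a cleaner image can be shown with a generic Monte Carlo running average: every pass adds samples, and for N independent samples the noise of the estimate shrinks roughly in proportion to 1/sqrt(N). This is a simplified illustration of the idea, not V-Ray's actual sampler; progressive_passes is a hypothetical helper estimating a single value.

```python
import random


def progressive_passes(sample_fn, num_passes, samples_per_pass):
    """Yield (pass_index, running_estimate) after each pass of sampling.

    Each pass draws samples_per_pass more values from sample_fn, and the
    estimate is the mean of all samples drawn so far.
    """
    if samples_per_pass <= 0:
        raise ValueError('samples_per_pass must be positive')
    total = 0.0
    count = 0
    for pass_index in range(1, num_passes + 1):
        for _ in range(samples_per_pass):
            total += sample_fn()
            count += 1
        yield pass_index, total / count


if __name__ == '__main__':
    rng = random.Random(7)
    for index, estimate in progressive_passes(rng.random, 5, 200):
        print(index, round(estimate, 3))  # drifts toward 0.5 as passes accumulate
```

The same reasoning explains why early Interactive images are noisy but available quickly: the first passes use few samples per pixel.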

IX. V-Ray Images

The VRayImage class provides access to the images rendered by V-Ray. It is used by the VRayRenderer, as well as by some of the arguments of the callbacks invoked when an event occurs. The VRayImage class provides utility functions for manipulating the retrieved binary image data. The binary data is in full 32-bit float per channel format and can be accessed directly, but there are convenience methods that perform compression in several of the most popular 8-bit formats - BMP, JPEG, PNG. Saving EXR and other high dynamic range formats is also possible through another API: VRayRenderer.vfb.saveImage().
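To see why the 8-bit formats cannot hold the full float data, consider a simple float-to-8-bit mapping. float_to_8bit is a generic sketch that only clamps to [0, 1] and rounds; V-Ray's own compression may additionally apply color mapping or an sRGB transfer curve, which is not modelled here.

```python
def float_to_8bit(value):
    """Map a float channel value to 0..255 by clamping to [0, 1] and rounding.

    Generic illustration only; no color mapping or gamma is applied.
    """
    clamped = min(max(value, 0.0), 1.0)
    return int(clamped * 255.0 + 0.5)
```

Any value above 1.0, such as a bright highlight stored as 4.0 in the float data, collapses to 255, which is why high dynamic range output goes through the EXR path instead of the 8-bit compression methods.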

The methods that are exposed by the VRayImage class come in two flavors. The first group of methods directly returns the bytes of the compressed image, while the second group compresses the image and saves it to a file.

with vray.VRayRenderer() as renderer:
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd(6000)
    image = renderer.getImage()
    data = image.compress(type='png')
    with open('intro_compressTo.png', 'wb') as outStream:
        outStream.write(data)
    image.save("intro_saveTo.png")

#include <fstream>

VRayInit init(NULL, true);
VRayRenderer renderer;
renderer.load("intro.vrscene");
renderer.startSync();
renderer.waitForRenderEnd(6000);
VRayImage* image = renderer.getImage();
size_t imageSize;
Png* png = image->compressToPng(imageSize);
std::ofstream outputStream("intro_compressTo.png", std::ofstream::binary);
outputStream.write((char*)png->getData(), imageSize);
outputStream.close();
delete png;
bool res = image->saveToPng("intro_saveTo.png");
delete image;

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
    using (VRayImage image = renderer.GetImage())
    {
        byte[] data = image.CompressToPNG();
        using (FileStream outStream = new FileStream("intro_compressTo.png", FileMode.Create, FileAccess.Write))
        {
            outStream.Write(data, 0, data.Length);
        }
        image.SaveToPNG("intro_saveTo.png");
    }
}

var fs = require('fs');

var renderer = vray.VRayRenderer();
renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function () {
            var image = renderer.getImage();
            image.compress('png', function (err, buffer) {
                if (err) throw err;
                fs.writeFile('intro_compressTo.png', buffer, function () {
                    image.save('intro_saveTo.png', function () {
                        renderer.close();
                    });
                });
            });
        });
    });
});

Every VRayImage instance should be closed so that the memory resources it holds are released. It is recommended to always free these resources (close the image) as soon as you have finished working with it. On platforms with a garbage collection mechanism, the internally held resources are freed even if the user does not close the retrieved image, but closing it explicitly releases them without waiting for the collector.

Downscaling

In addition to the utility methods for compression, the VRayImage class supports downscale operations that resize the retrieved image to a smaller one. The result of the downscale operations is another VRayImage instance which has all the utility methods of the class.

with vray.VRayRenderer() as renderer:
    renderer.load('intro.vrscene')
    renderer.start()
    renderer.waitForRenderEnd(6000)
    image = renderer.getImage()
    downscaled = image.getDownscaled(260, 180)
    downscaled.save('intro.png')

VRayInit init(NULL, true);
VRayRenderer renderer;
renderer.load("intro.vrscene");
renderer.start();
renderer.waitForRenderEnd(6000);
// LocalVRayImage is a variation of VRayImage to be created on the stack, so that the image gets auto-deleted
LocalVRayImage image(renderer.getImage());
LocalVRayImage downscaled(image->getDownscaled(260, 180));
downscaled->saveToPng("intro.png");

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.Load("intro.vrscene");
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
    using (VRayImage image = renderer.GetImage())
    {
        using (VRayImage downscaled = image.GetDownscaled(260, 180))
        {
            downscaled.SaveToPNG("intro.png");
        }
    }
}

var renderer = vray.VRayRenderer();
renderer.load('intro.vrscene', function (err) {
    if (err) throw err;
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function () {
            var image = renderer.getImage();
            image.getDownscaled(260, 180, function(downscaled) {
                downscaled.save('intro.png', function() {
                    downscaled.close(); // Not mandatory, can be left to the garbage collector
                    image.close(); // Not mandatory, can be left to the garbage collector
                    renderer.close();
                });
            });
        });
    });
});
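getDownscaled takes an explicit target width and height, so preserving the aspect ratio is up to the caller. fit_within is a hypothetical helper that computes the largest size with the same aspect ratio inside a bounding box, without upscaling.

```python
def fit_within(width, height, max_width, max_height):
    """Largest (w, h) with the aspect ratio of width x height that fits the box.

    Never upscales; each side is at least 1 pixel.
    """
    if width <= 0 or height <= 0:
        raise ValueError('image size must be positive')
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, for a 640x360 render, fit_within(640, 360, 260, 180) gives (260, 146), which could then be passed to getDownscaled instead of a fixed 260x180 that would distort the image.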

Changing the Image Size

The size of the rendered image can be controlled through the size property of the VRayRenderer class. When a .vrscene file is loaded, the image size defined in that file is used; to override it, set the VRayRenderer size after loading the file.

with vray.VRayRenderer() as renderer:
    renderer.load('intro.vrscene')
    renderer.size = (640, 360)
    renderer.start()
    renderer.waitForRenderEnd(6000)
    image = renderer.getImage()
    image.save('intro.png')

VRayInit init(NULL, true);
// No parentheses here: "VRayRenderer renderer();" would declare a function, not an object.
VRayRenderer renderer;
renderer.load("intro.vrscene");
renderer.setImageSize(640, 360);
renderer.start();
renderer.waitForRenderEnd(6000);
LocalVRayImage image(renderer.getImage());
image->saveToPng("intro.png");

using (VRayRenderer renderer = new VRayRenderer())
{
    renderer.Load("intro.vrscene");
    renderer.SetImageSize(640, 360);
    renderer.Start();
    renderer.WaitForRenderEnd(6000);
    using (VRayImage image = renderer.GetImage())
    {
        image.SaveToPNG("intro.png");
    }
}

var renderer = vray.VRayRenderer();
renderer.load('intro.vrscene', function(err) {
    if (err) throw err;
    renderer.size = {width: 640, height: 360};
    renderer.start(function(err) {
        if (err) throw err;
        renderer.waitForRenderEnd(6000, function() {
            var image = renderer.getImage();
            image.save('intro.png', function() {
                image.close();
                renderer.close();
            });
        });
    });
});

X. Render Elements

V-Ray Render Elements (also known as Render Channels or Arbitrary Output Variables, AOVs) are images containing various types of render data, encoded as 3-element colors, single floats or integers. Examples include Z-depth, surface normals, UV coordinates, velocity, lighting and reflections. Each V-Ray scene may contain an arbitrary number of render elements. Each channel is enabled by a unique plugin, except for the RGB and Alpha channels, which are always enabled.

To access the render elements in the current scene, use the VRayRenderer instance into which the scene is loaded. The data of each render element can be retrieved either as a VRayImage or as raw data (byte, integer or float buffers). Optionally, a sub-region of interest can be passed to these APIs to retrieve only that part of the data.

If the scene does not contain render element plugins, they can be added, as shown in the examples below.
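Raw render element data is returned as a flat buffer, and the exact layout depends on the binding and the element type. The following is only a sketch under stated assumptions: it assumes a row-major buffer (row 0 first, pixels left to right) with a fixed number of values per pixel (3 for color elements, 1 for single-float elements such as a depth buffer) and shows how a rectangular sub-region maps onto such a buffer. The crop_region function is hypothetical and not part of the App SDK.

```python
from typing import List, Sequence


def crop_region(buffer: Sequence[float], width: int, height: int, channels: int,
                x: int, y: int, w: int, h: int) -> List[float]:
    """Return the values of the rectangle (x, y, w, h) from a flat buffer.

    Assumes a row-major layout with `channels` consecutive values per pixel.
    This layout is an illustrative assumption, not the documented App SDK layout.
    """
    if len(buffer) != width * height * channels:
        raise ValueError("buffer size does not match width * height * channels")
    if x < 0 or y < 0 or w <= 0 or h <= 0 or x + w > width or y + h > height:
        raise ValueError("region lies outside the image")
    out: List[float] = []
    row_stride = width * channels
    for row in range(y, y + h):
        # Offset of the first value of the region in this row.
        start = row * row_stride + x * channels
        out.extend(buffer[start:start + w * channels])
    return out


if __name__ == "__main__":
    # A 4x3 single-channel "depth" buffer whose values equal their index.
    depth = [float(i) for i in range(4 * 3)]
    # 2x2 block starting at column 1, row 1.
    print(crop_region(depth, 4, 3, 1, 1, 1, 2, 2))  # [5.0, 6.0, 9.0, 10.0]
```

Copying one contiguous slice per row keeps the work proportional to the size of the region rather than the whole image.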

# Compatibility with Python 2.7.
from __future__ import print_function

# The directory containing the vray shared object should be present in the PYTHONPATH environment variable.
# Try to import the vray module from VRAY_SDK/python, if it is not in PYTHONPATH
import sys, os

VRAY_SDK = os.environ.get('VRAY_SDK')
if VRAY_SDK:
    sys.path.append(os.path.join(VRAY_SDK, 'python'))
import vray

# Fall back to the current directory if VRAY_SDK is not set, as the C++ example does.
SCENE_PATH = os.path.join(VRAY_SDK or '.', 'scenes')

# Change process working directory to SCENE_PATH in order to be able to load relative scene resources.
os.chdir(SCENE_PATH)

def addExampleRenderElements(renderer):
    reManager = renderer.renderElements

    # In Python and JS, render element types are identified by strings:
    print('All render element (channel) identifiers:')
    print(reManager.getAvailableTypes())

    # The RGB channel is always present. It is often referred to as "Beauty" by compositors.
    # Alpha is also always included.

    # --- BEAUTY ELEMENTS ---
    # "Beauty" elements are the components that make up the main RGB channel,
    # such as direct and indirect lighting, specular and diffuse contributions and so on.

    # Light bounced from diffuse materials (layers)
    reManager.add('diffuse')
    # Light from glossy reflections
    reManager.add('reflection')
    # Refracted light
    reManager.add('refraction')
    # Light from perfect mirror reflections
    reManager.add('specular')
    # Subsurface scattered light
    reManager.add('sss')
    # Light from self-illuminating materials
    reManager.add('self_illumination')
    # Global illumination, indirect lighting
    reManager.add('gi')
    # Direct lighting
    reManager.add('lighting')
    # Sum of all lighting
    reManager.add('total_light')
    # Shadows, combined with diffuse. Shadowed areas are brighter
    reManager.add('shadow')
    # Reflected light if surfaces were fully reflective
    reManager.add('raw_reflection')
    # Refracted light if surfaces were fully refractive
    reManager.add('raw_refraction')
    # Attenuation factor for reflections. Refl = ReflFilter * RawRefl
    reManager.add('reflection_filter')
    # Attenuation factor for refractions. Refr = RefrFilter * RawRefr
    reManager.add('refraction_filter')
    # Intensity of GI before multiplication with the diffuse filter
    reManager.add('raw_gi')
    # Direct lighting without diffuse color
    reManager.add('raw_light')
    # Sum light without diffuse color
    reManager.add('raw_total_light')
    # Shadows without the diffuse factor
    reManager.add('raw_shadow')
    # Grayscale material glossiness values
    reManager.add('reflection_glossiness')
    # Glossiness for highlights only
    reManager.add('reflection_hilight_glossiness')

    # --- MATTE ELEMENTS ---
    # Matte elements are used for masking out parts of the frame when compositing.

    # Color is based on material ID, see MtlMaterialID plugin
    reManager.add('material_id')
    # Color is based on the objectID of each Node
    reManager.add('node_id')

    # --- GEOMETRIC ELEMENTS ---
    # Geometric data such as normals and depth has various applications
    # in compositing and post-processing.

    # The pure geometric normals, encoded as R=X, G=Y, B=Z
    reManager.add('normals')
    # Normals after bump mapping
    reManager.add('bump_normals')
    # Normalized grayscale depth buffer
    reManager.add('z_depth')
    # The per-frame velocity of moving objects
    reManager.add('velocity')

# Create an instance of VRayRenderer for production render mode. The renderer is automatically closed after the `with` block.
with vray.VRayRenderer() as renderer:
    renderer.renderMode = 'production'
    # Load scene from a file.
    renderer.load(os.path.join(SCENE_PATH, 'cornell_new.vrscene'))
    # This will add the necessary channel plugins to the scene
    addExampleRenderElements(renderer)
    renderer.startSync()
    renderer.waitForRenderEnd()
    # This will save all the channels with suffixed file names
    print('>>> Saving all channels')
    renderer.vfb.saveImage('cornell_RE.png')
    diffuseRE = renderer.renderElements.get('diffuse')
    # If the diffuse render element is missing it will evaluate to false
    if diffuseRE:
        diffusePlugin = diffuseRE.plugin
        print('>>> Saving inverted JPG from plugin {0} {1}'.format(diffusePlugin.getType(), diffusePlugin.getName()))
        image = diffuseRE.getImage()
        if image:
            image.makeNegative()
            image.save('cornell_inv_diffuse_VRayImage.jpg')

# The renderer object is unusable after closing since its resources are freed.
# It should be released for garbage collection.

#include <cstdio>

#include <cstdlib>

#include <string>

#include "vraysdk.hpp"

#include "vrayplugins.hpp"

using namespace VRay;

using namespace VRay::Plugins;

using namespace std;

const char *BASE_PATH = getenv("VRAY_SDK");

string SCENE_PATH = (BASE_PATH ? string(BASE_PATH) : string(".")) + PATH_DELIMITER + "scenes";

void addExampleRenderElements(VRayRenderer &renderer) {

RenderElements reManager = renderer.getRenderElements();

// The RGB channel is always present. It is often referred to as "Beauty" by compositors

// Alpha is also always included

// Beauty elements

// Light bounced from diffuse materials (layers)

reManager.add(RenderElement::DIFFUSE, NULL, NULL);

// Light from glossy reflections

reManager.add(RenderElement::REFLECT, NULL, NULL);

// Refracted light

reManager.add(RenderElement::REFRACT, NULL, NULL);

// Light from perfect mirror reflections

reManager.add(RenderElement::SPECULAR, NULL, NULL);

// Subsurface scattered light

reManager.add(RenderElement::SSS, NULL, NULL);

// Light from self-illuminating materials

reManager.add(RenderElement::SELFILLUM, NULL, NULL);

// Global illumination, indirect lighting

reManager.add(RenderElement::GI, NULL, NULL);

// Direct lighting

reManager.add(RenderElement::LIGHTING, NULL, NULL);

// Sum of all lighting

reManager.add(RenderElement::TOTALLIGHT, NULL, NULL);

// Shadows, combined with diffuse. Shadowed areas are brighter

reManager.add(RenderElement::SHADOW, NULL, NULL);

// Reflected light if surfaces were fully reflective

reManager.add(RenderElement::RAW_REFLECTION, NULL, NULL);

// Refracted light if surfaces were fully refractive

reManager.add(RenderElement::RAW_REFRACTION, NULL, NULL);

// Attenuation factor for reflections. Refl = ReflFilter * RawRefl

reManager.add(RenderElement::REFLECTION_FILTER, NULL, NULL);

// Attenuation factor for refractions. Refr = RefrFilter * RawRefr

reManager.add(RenderElement::REFRACTION_FILTER, NULL, NULL);

// Intensity of GI before multiplication with the diffuse filter

reManager.add(RenderElement::RAWGI, NULL, NULL);

// Direct lighting without diffuse color

reManager.add(RenderElement::RAWLIGHT, NULL, NULL);

// Sum light without diffuse color

reManager.add(RenderElement::RAWTOTALLIGHT, NULL, NULL);

// Shadows without the diffuse factor

reManager.add(RenderElement::RAWSHADOW, NULL, NULL);

// Grayscale material glossiness values

reManager.add(RenderElement::VRMTL_REFLECTGLOSS, NULL, NULL);

// Glossiness for highlights only

reManager.add(RenderElement::VRMTL_REFLECTHIGLOSS, NULL, NULL);

// Matte elements

// Color is based on material ID, see MtlMaterialID plugin

reManager.add(RenderElement::MTLID, NULL, NULL);

// Color is based on the objectID of each Node

reManager.add(RenderElement::NODEID, NULL, NULL);

// Geometric elements

// The pure geometric normals, encoded as R=X, G=Y, B=Z

reManager.add(RenderElement::NORMALS, NULL, NULL);

// Normals after bump mapping

reManager.add(RenderElement::BUMPNORMALS, NULL, NULL);

// Normalized grayscale depth buffer

reManager.add(RenderElement::ZDEPTH, NULL, NULL);

// The per-frame velocity of moving objects

reManager.add(RenderElement::VELOCITY, NULL, NULL);

}

int main() {

// Change process working directory to SCENE_PATH in order to be able to load relative scene resources.

changeCurrentDir(SCENE_PATH.c_str());

VRayInit init(NULL, true);

VRayRenderer renderer;

renderer.setRenderMode(VRayRenderer::RENDER_MODE_PRODUCTION);

// Load scene from a file.

renderer.load("cornell_new.vrscene");

// This will add the necessary channel plugins to the scene

addExampleRenderElements(renderer);

renderer.startSync();

renderer.waitForRenderEnd();

// This will save all the channels with suffixed file names

printf(">>> Saving all channels\n");

renderer.vfb.saveImage("cornell_RE.png");

RenderElement diffuseRE = renderer.getRenderElements().get(RenderElement::DIFFUSE);

// If the diffuse render element is missing it will evaluate to false

if (diffuseRE) {

Plugin diffusePlugin = diffuseRE.getPlugin();

printf(">>> Saving inverted JPG from plugin %s %s\n", diffusePlugin.getType(), diffusePlugin.getName());

VRayImage *image = diffuseRE.getImage();

if (image) {

image->makeNegative();

image->saveToJpeg("cornell_inv_diffuse_VRayImage.jpg");

delete image;

}

}

return 0;

}

using System;

using System.IO;

using VRay;

using VRay.Plugins;

namespace _render_elements

{

class Program

{

private static void addExampleRenderElements(VRayRenderer renderer)

{

RenderElements reManager = renderer.RenderElements;

/// --- "BEAUTY" ELEMENTS --- ///

/// "Beauty" elements are the components that make up the main RGB channel,

/// such as direct and indirect lighting, specular and diffuse contributions and so on.

// The RGB channel is always present. It is often referred to as "Beauty" by compositors

// Alpha is also always included

// Light bounced from diffuse materials (layers)

reManager.Add(RenderElementType.DIFFUSE, "", "");

// Light from glossy reflections

reManager.Add(RenderElementType.REFLECT, "", "");

// Refracted light

reManager.Add(RenderElementType.REFRACT, "", "");

// Light from perfect mirror reflections

reManager.Add(RenderElementType.SPECULAR, "", "");

// Subsurface scattered light

reManager.Add(RenderElementType.SSS, "", "");

// Light from self-illuminating materials

reManager.Add(RenderElementType.SELFILLUM, "", "");

// Global illumination, indirect lighting

reManager.Add(RenderElementType.GI, "", "");

// Direct lighting

reManager.Add(RenderElementType.LIGHTING, "", "");

// Sum of all lighting

reManager.Add(RenderElementType.TOTALLIGHT, "", "");

// Shadows, combined with diffuse. Shadowed areas are brighter

reManager.Add(RenderElementType.SHADOW, "", "");

// Reflected light if surfaces were fully reflective

reManager.Add(RenderElementType.RAW_REFLECTION, "", "");

// Refracted light if surfaces were fully refractive

reManager.Add(RenderElementType.RAW_REFRACTION, "", "");

// Attenuation factor for reflections. Refl = ReflFilter * RawRefl

reManager.Add(RenderElementType.REFLECTION_FILTER, "", "");

// Attenuation factor for refractions. Refr = RefrFilter * RawRefr

reManager.Add(RenderElementType.REFRACTION_FILTER, "", "");

// Intensity of GI before multiplication with the diffuse filter

reManager.Add(RenderElementType.RAWGI, "", "");

// Direct lighting without diffuse color

reManager.Add(RenderElementType.RAWLIGHT, "", "");

// Sum light without diffuse color

reManager.Add(RenderElementType.RAWTOTALLIGHT, "", "");

// Shadows without the diffuse factor

reManager.Add(RenderElementType.RAWSHADOW, "", "");

// Grayscale material glossiness values

reManager.Add(RenderElementType.VRMTL_REFLECTGLOSS, "", "");

// Glossiness for highlights only

reManager.Add(RenderElementType.VRMTL_REFLECTHIGLOSS, "", "");

/// --- MATTE ELEMENTS --- ///

/// Matte elements are used for masking out parts of the frame when compositing.

// Color is based on material ID, see MtlMaterialID plugin

reManager.Add(RenderElementType.MTLID, "", "");

// Color is based on the objectID of each Node

reManager.Add(RenderElementType.NODEID, "", "");

/// --- GEOMETRIC ELEMENTS --- ///

/// Geometric data such as normals and depth has various applications in compositing and post-processing.

// The pure geometric normals, encoded as R=X, G=Y, B=Z

reManager.Add(RenderElementType.NORMALS, "", "");

// Normals after bump mapping

reManager.Add(RenderElementType.BUMPNORMALS, "", "");

// Normalized grayscale depth buffer

reManager.Add(RenderElementType.ZDEPTH, "", "");

// The per-frame velocity of moving objects

reManager.Add(RenderElementType.VELOCITY, "", "");

}

static void Main(string[] args)

{

string SCENE_PATH = Path.Combine(Environment.GetEnvironmentVariable("VRAY_SDK"), "scenes");

// Change process working directory to SCENE_PATH in order to be able to load relative scene resources.

Directory.SetCurrentDirectory(SCENE_PATH);

// Create an instance of VRayRenderer with default options. The renderer is automatically closed after the `using` block.

using (VRayRenderer renderer = new VRayRenderer())

{

renderer.RenderMode = RenderMode.PRODUCTION;

// Load scene from a file.

renderer.Load("cornell_new.vrscene");

// This will add the necessary channel plugins to the scene

addExampleRenderElements(renderer);

renderer.StartSync();

renderer.WaitForRenderEnd();

// This will save all the channels with suffixed file names

Console.WriteLine(">>> Saving all channels");

renderer.Vfb.SaveImage("cornell_RE.png");

RenderElement diffuseRE = renderer.RenderElements.Get(RenderElementType.DIFFUSE);

Plugin diffusePlugin = diffuseRE.Plugin;

Console.WriteLine(String.Format(">>> Saving inverted JPG from plugin {0} {1}\n", diffusePlugin.Gettype(), diffusePlugin.GetName()));

// VRayImage is disposable; the using block releases it when done.
using (VRayImage image = diffuseRE.GetImage())
{
    image.MakeNegative();
    image.SaveToJPEG("cornell_inv_diffuse_VRayImage.jpg");
}

}

// The renderer object is unusable after closing since its resources are freed.

// It should be released for garbage collection.

}

}

}

var path = require('path');

var vray = require(path.join(process.env.VRAY_SDK, 'node', 'vray'));

var SCENE_PATH = path.join(process.env.VRAY_SDK, 'scenes');

// Change process working directory to SCENE_PATH in order to be able to load relative scene resources.

process.chdir(SCENE_PATH);

var addExampleRenderElements = function(renderer) {

var reManager = renderer.renderElements;

// In Python and JS we use strings:

console.log('All render element (channel) identifiers:');

console.log(reManager.getAvailableTypes());

// The RGB channel is always present. It is often referred to as "Beauty" by compositors

// Alpha is also always included

// --- BEAUTY ELEMENTS ---

// "Beauty" elements are the components that make up the main RGB channel,

// such as direct and indirect lighting, specular and diffuse contributions and so on.

// Light bounced from diffuse materials (layers)

reManager.add('diffuse');

// Light from glossy reflections

reManager.add('reflection');

// Refracted light

reManager.add('refraction');

// Light from perfect mirror reflections

reManager.add('specular');

// Subsurface scattered light

reManager.add('sss');

// Light from self-illuminating materials

reManager.add('self_illumination');

// Global illumination, indirect lighting

reManager.add('gi');

// Direct lighting

reManager.add('lighting');

// Sum of all lighting

reManager.add('total_light');

// Shadows, combined with diffuse. Shadowed areas are brighter

reManager.add('shadow');

// Reflected light if surfaces were fully reflective

reManager.add('raw_reflection');

// Refracted light if surfaces were fully refractive

reManager.add('raw_refraction');

// Attenuation factor for reflections. Refl = ReflFilter * RawRefl

reManager.add('reflection_filter');

// Attenuation factor for refractions. Refr = RefrFilter * RawRefr

reManager.add('refraction_filter');

// Intensity of GI before multiplication with the diffuse filter

reManager.add('raw_gi');

// Direct lighting without diffuse color

reManager.add('raw_light');

// Sum light without diffuse color

reManager.add('raw_total_light');

// Shadows without the diffuse factor

reManager.add('raw_shadow');

// Grayscale material glossiness values

reManager.add('reflection_glossiness');

// Glossiness for highlights only

reManager.add('reflection_hilight_glossiness');

// --- MATTE ELEMENTS ---

// Matte elements are used for masking out parts of the frame when compositing.

// Color is based on material ID, see MtlMaterialID plugin

reManager.add('material_id');

// Color is based on the objectID of each Node

reManager.add('node_id');

// --- GEOMETRIC ELEMENTS ---

// Geometric data such as normals and depth has various applications

// in compositing and post-processing.

// The pure geometric normals, encoded as R=X, G=Y, B=Z

reManager.add('normals');

// Normals after bump mapping

reManager.add('bump_normals');

// Normalized grayscale depth buffer

reManager.add('z_depth');

// The per-frame velocity of moving objects

reManager.add('velocity');

}

// Create an instance of VRayRenderer for production render mode.

var renderer = vray.VRayRenderer();

renderer.renderMode = 'production';

// Load scene from a file asynchronously.

renderer.load('cornell_new.vrscene', function(err) {

if (err) throw err;

// This will add the necessary channel plugins to the scene

addExampleRenderElements(renderer);

renderer.startSync();

renderer.waitForRenderEnd(function() {

// This will save all the channels with suffixed file names

console.log('>>> Saving all channels');

renderer.vfb.saveImageSync('cornell_RE.png');

var diffuseRE = renderer.renderElements.get('diffuse');

// If the diffuse render element is missing it will evaluate to false

if (diffuseRE) {

var diffusePlugin = diffuseRE.plugin;

console.log('>>> Saving inverted JPG from plugin ' + diffusePlugin.getType() + ' ' + diffusePlugin.getName());

var image = diffuseRE.getImage();

if (image) {

image.makeNegative();

image.save('cornell_inv_diffuse_VRayImage.jpg');

}

}

// Closes the renderer.

// This call is mandatory in order to free up the event loop.

// The renderer object is unusable after closing since its resources

// are freed. It should be released for garbage collection.

renderer.close();

});

});
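The comments in the examples above state that the reflection element equals the reflection filter multiplied by the raw reflection (Refl = ReflFilter * RawRefl), and likewise for refraction. In compositing this is a per-pixel, per-channel product. The sketch below applies that relation to plain Python lists of RGB tuples; it makes no App SDK calls, and the pixel values and the apply_filter name are made up for illustration.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def apply_filter(filter_pixels: List[RGB], raw_pixels: List[RGB]) -> List[RGB]:
    """Per-pixel, per-channel product: element = filter * raw."""
    if len(filter_pixels) != len(raw_pixels):
        raise ValueError("images must have the same number of pixels")
    return [
        (f[0] * r[0], f[1] * r[1], f[2] * r[2])
        for f, r in zip(filter_pixels, raw_pixels)
    ]


if __name__ == "__main__":
    # Two-pixel "images" standing in for reflection_filter and raw_reflection.
    reflection_filter = [(0.5, 0.5, 0.5), (1.0, 0.0, 0.25)]
    raw_reflection = [(0.8, 0.4, 0.2), (0.5, 0.5, 0.5)]
    print(apply_filter(reflection_filter, raw_reflection))
    # [(0.4, 0.2, 0.1), (0.5, 0.0, 0.125)]
```

The same function would rebuild the refraction element from refraction_filter and raw_refraction.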

XI. Plugins

Plugins are the objects that specify the lights, geometry, materials or settings that constitute the 3D scene. Each V-Ray scene consists of a set of plugin instances. The V-Ray Application SDK exposes methods in the main VRayRenderer class that can be used to create plugin instances or to list, modify and delete the existing ones in the scene.

Plugin objects can be retrieved by instance name. Once you have obtained a plugin instance, its property (a.k.a. parameter) values can be read or set to affect the rendered image. Properties are accessed by name. In Interactive mode, changes to plugin property values are usually applied immediately during rendering and become visible almost instantly, but each change resets image sampling, so the image is noisy at first. In Production mode, changes to plugin property values take effect only if they are applied before rendering starts. The following example demonstrates how to change the transform of the render view (camera) in a scene with the App SDK.

with vray.VRayRenderer() as renderer:
    renderer.load('cornell_new.vrscene')
    # find the RenderView plugin in the scene
    renderView = renderer.plugins.renderView
    # change the transform value
    newTransform = renderView.transform
    newTransform = newTransform.replaceOffset(newTransform.offset + vray.Vector(-170, 120, newTransform.offset.z))
    renderView.transform = newTransform
    renderer.startSync()
    renderer.waitForRenderEnd(6000)

#include "vraysdk.hpp"

// The vrayplugins header provides specialized classes for concrete types of plugins, deriving from the generic Plugin base class.

// It is optional and the generic Plugin API can be used instead.

#include "vrayplugins.hpp"

using namespace VRay;

using namespace VRay::Plugins;

int main() {

VRayInit init(NULL, true);

VRayRenderer renderer;

renderer.load("cornell_new.vrscene");

// find the RenderView plugin in the scene

RenderView renderView = renderer.getPlugin<RenderView>("renderView");

// change the transform value

Transform newTransform = renderView.getTransform("transform");

newTransform.offset += Vector(-170, 120, newTransform.offset.z);

renderView.set_transform(newTransform);

renderer.startSync();

renderer.waitForRenderEnd(6000);

return 0;

}

using VRay;

using VRay.Plugins;

using (VRayRenderer renderer = new VRayRenderer())

{

renderer.Load("cornell_new.vrscene");

// find the RenderView plugin in the scene

RenderView renderView = renderer.GetPlugin<RenderView>("renderView");

// change the transform value

Transform newTransform = renderView.Transform;

renderView.Transform = newTransform.ReplaceOffset(newTransform.Offset + new Vector(-170, 120, newTransform.Offset.Z));

renderer.StartSync();

renderer.WaitForRenderEnd(6000);

}

var renderer = vray.VRayRenderer();

renderer.load('cornell_new.vrscene', function(err) {

if (err) throw err;

// find the RenderView plugin in the scene

var renderView = renderer.plugins.renderView;

// change the transform value

var newTransform = renderView.transform;

newTransform = newTransform.replaceOffset(newTransform.offset.add(vray.Vector(-170, 120, newTransform.offset.z)));

renderView.transform = newTransform;

// Start rendering.

renderer.start(function (err) {

if (err) throw err;

renderer.waitForRenderEnd(6000, function() {

renderer.close();

});

});

});

Retrieving the value of a plugin property always returns a copy of the value stored in the local V-Ray engine, not a reference to it. Modifying that copy does not change the scene. To make the change affect the rendered image, pass the modified value back through the plugin's setter (or assign it to the property), as the examples above do with the transform.
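The copy-versus-reference behavior can be illustrated without V-Ray. The sketch below uses a hypothetical FakePlugin class (not part of the App SDK) whose getter returns a copy of the stored value, mirroring the behavior described above: mutating the returned object leaves the stored value untouched until it is passed back to the setter.

```python
import copy
from typing import Any, Dict


class FakePlugin:
    """Hypothetical stand-in for a plugin: stores property values and
    hands out copies, as the App SDK getters are described to do."""

    def __init__(self) -> None:
        self._props: Dict[str, Any] = {}

    def get(self, name: str) -> Any:
        # Return a deep copy so callers cannot mutate the stored value.
        return copy.deepcopy(self._props[name])

    def set(self, name: str, value: Any) -> None:
        # Store a copy so later changes to `value` do not leak in either.
        self._props[name] = copy.deepcopy(value)


if __name__ == "__main__":
    render_view = FakePlugin()
    render_view.set("offset", [0.0, 0.0, 100.0])

    offset = render_view.get("offset")
    offset[0] = -170.0                  # modifies only the local copy
    print(render_view.get("offset"))   # [0.0, 0.0, 100.0]

    render_view.set("offset", offset)  # the setter applies the change
    print(render_view.get("offset"))   # [-170.0, 0.0, 100.0]
```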

Adding and removing plugins

Plugin instances can be created and removed dynamically with the App SDK. The next example demonstrates how a new light can be added to the scene.

with vray.VRayRenderer() as renderer:
    renderer.load('cornell_new.vrscene')
    # create a new light plugin
    lightOmni = renderer.classes.LightOmni('lightOmniBlue')
    lightOmni.color = vray.AColor(0, 0, 1)
    lightOmni.intensity = 60000.0
    lightOmni.decay = 2.0
    lightOmni.shadowRadius = 40.0
    lightOmni.transform = vray.Transform(
        vray.Matrix(vray.Vector(1.0, 0.0, 0.0), vray.Vector(0.0, 0.0, 1.0), vray.Vector(0.0, -1.0, 0.0)),
        vray.Vector(-50, 50, 50))
    renderer.startSync()
    renderer.waitForRenderEnd(6000)

#include "vraysdk.hpp"

#include "vrayplugins.hpp"

using namespace VRay;

using namespace VRay::Plugins;

int main() {

VRayInit init(NULL, true);

VRayRenderer renderer;

renderer.load("cornell_new.vrscene");

// create a new light plugin

LightOmni lightOmni = renderer.newPlugin<LightOmni>("lightOmniBlue");

lightOmni.set_color(AColor(0.f, 0.f, 1.f));

lightOmni.set_intensity(60000.f);

lightOmni.set_decay(2.0f);

lightOmni.set_shadowRadius(40.0f);

lightOmni.set_transform(Transform(

Matrix(Vector(1.0, 0.0, 0.0), Vector(0.0, 0.0, 1.0), Vector(0.0, -1.0, 0.0)),

Vector(-50, 50, 50)));

renderer.startSync();

renderer.waitForRenderEnd(6000);

return 0;

}

using VRay;

using VRay.Plugins;

using (VRayRenderer renderer = new VRayRenderer())

{

renderer.Load("cornell_new.vrscene");

// create a new light plugin

LightOmni lightOmni = renderer.NewPlugin<LightOmni>("lightOmniBlue");

lightOmni.Color = new AColor(0, 0, 1);

lightOmni.Intensity = 60000.0f;

lightOmni.Decay = 2.0f;

lightOmni.ShadowRadius = 40.0f;

lightOmni.Transform = new Transform(

new Matrix(new Vector(1.0, 0.0, 0.0), new Vector(0.0, 0.0, 1.0), new Vector(0.0, -1.0, 0.0)),

new Vector(-50, 50, 50));

renderer.StartSync();

renderer.WaitForRenderEnd(6000);

}

var renderer = vray.VRayRenderer();

renderer.load('cornell_new.vrscene', function(err) {

if (err) throw err;

// create a new light plugin

var lightOmni = renderer.classes.LightOmni('lightOmniBlue');

lightOmni.color = vray.AColor(0, 0, 1);

lightOmni.intensity = 60000.0;

lightOmni.decay = 2.0;

lightOmni.shadowRadius = 40.0;

lightOmni.transform = vray.Transform(

vray.Matrix(vray.Vector(1.0, 0.0, 0.0), vray.Vector(0.0, 0.0, 1.0), vray.Vector(0.0, -1.0, 0.0)),

vray.Vector(-50, 50, 50));

renderer.startSync();

renderer.waitForRenderEnd(6000, function() {

renderer.close();

});

});
Existing plugin instances can be removed in a similar way. The following examples delete the sphere node Sphere0Shape4@node from the loaded scene before rendering.

with vray.VRayRenderer() as renderer:
    renderer.load('cornell_new.vrscene')
    del renderer.plugins['Sphere0Shape4@node']
    renderer.startSync()
    renderer.waitForRenderEnd(6000)

VRayInit init(NULL, true);

VRayRenderer renderer;

renderer.load("cornell_new.vrscene");

Plugin sphere = renderer.getPlugin("Sphere0Shape4@node");

renderer.deletePlugin(sphere);

renderer.startSync();

renderer.waitForRenderEnd(6000);

using (VRayRenderer renderer = new VRayRenderer())

{

renderer.Load("cornell_new.vrscene");

Plugin sphere = renderer.GetPlugin("Sphere0Shape4@node");

renderer.DeletePlugin(sphere);

renderer.StartSync();

renderer.WaitForRenderEnd(6000);

}

var renderer = vray.VRayRenderer();

renderer.load('./cornell_new.vrscene', function(err) {

if (err) throw err;

delete renderer.plugins['Sphere0Shape4@node'];

renderer.startSync();

renderer.waitForRenderEnd(6000, function() {

renderer.close();

});

});

Automatic commit of property changes
By default, each change to a plugin property is committed to the scene as soon as it is made. Setting the renderer's autoCommit property to false (setAutoCommit(false) in C++) defers changes until commit() is called, so that a group of changes is applied together. In the examples below, the new light, the field-of-view change and the deleted sphere are all applied by a single commit.

with vray.VRayRenderer() as renderer:
    renderer.autoCommit = False
    renderer.load('cornell_new.vrscene')
    # This change won't be applied immediately
    lightOmni = renderer.classes.LightOmni()
    lightOmni.color = vray.AColor(0, 0, 1)
    lightOmni.intensity = 60000.0
    lightOmni.decay = 2.0
    lightOmni.shadowRadius = 40.0
    lightOmni.transform = vray.Transform(vray.Matrix(vray.Vector(1.0, 0.0, 0.0),
        vray.Vector(0.0, 0.0, 1.0), vray.Vector(0.0, -1.0, 0.0)), vray.Vector(-50, 50, 50))
    renderer.startSync()
    renderer.waitForRenderEnd(2000)
    # Make a group of changes 2 seconds after the render starts
    renderer.plugins.renderView.fov = 1.5
    del renderer.plugins['Sphere0Shape4@node']
    # Commit applies all 3 changes
    renderer.commit()
    renderer.waitForRenderEnd(4000)

#include "vraysdk.hpp"

#include "vrayplugins.hpp"

using namespace VRay;

using namespace VRay::Plugins;

int main() {

VRayInit init(NULL, true);

VRayRenderer renderer;

renderer.setAutoCommit(false);

renderer.load("cornell_new.vrscene");

// This change won't be applied immediately

LightOmni lightOmni = renderer.newPlugin<LightOmni>();

lightOmni.set_color(Color(0.f, 0.f, 1.f));

lightOmni.set_intensity(60000.0f);

lightOmni.set_decay(2.0f);

lightOmni.set_shadowRadius(40.0f);

lightOmni.set_transform(Transform(Matrix(Vector(1.0, 0.0, 0.0), Vector(0.0, 0.0, 1.0), Vector(0.0, -1.0, 0.0)), Vector(-50, 50, 50)));

renderer.startSync();

renderer.waitForRenderEnd(2000);

// Make a group of changes 2 seconds after the render starts

Plugin sphere = renderer.getPlugin("Sphere0Shape4@node");

renderer.deletePlugin(sphere);

RenderView renderView = renderer.getPlugin<RenderView>("renderView");

renderView.set_fov(1.5f);

// Commit applies all 3 changes

renderer.commit();

renderer.waitForRenderEnd(4000);

return 0;

}

using VRay;

using VRay.Plugins;

using (VRayRenderer renderer = new VRayRenderer())

{

renderer.AutoCommit = false;

renderer.Load("cornell_new.vrscene");

// This change won't be applied immediately

LightOmni lightOmni = renderer.NewPlugin<LightOmni>();

lightOmni.Color = new Color(0, 0, 1);

lightOmni.Intensity = 60000.0f;

lightOmni.Decay = 2.0f;

lightOmni.ShadowRadius = 40.0f;

lightOmni.Transform = new Transform(

new Matrix(new Vector(1.0, 0.0, 0.0), new Vector(0.0, 0.0, 1.0), new Vector(0.0, -1.0, 0.0)),

new Vector(-50, 50, 50)

);

renderer.StartSync();

renderer.WaitForRenderEnd(2000);

// Make a group of changes 2 seconds after the render starts

Plugin sphere = renderer.GetPlugin("Sphere0Shape4@node");

renderer.DeletePlugin(sphere);

RenderView renderView = renderer.GetPlugin<RenderView>("renderView");

renderView.Fov = 1.5f;

// Commit applies all 3 changes

renderer.Commit();

renderer.WaitForRenderEnd(4000);

}

var renderer = vray.VRayRenderer();

renderer.autoCommit = false;

renderer.load('cornell_new.vrscene', function(err) {

if (err) throw err;

// This change won't be applied immediately

var lightOmni = renderer.classes.LightOmni();

lightOmni.color = vray.AColor(0, 0, 1);

lightOmni.intensity = 60000.0;

lightOmni.decay = 2.0;

lightOmni.shadowRadius = 40.0;

lightOmni.transform = vray.Transform(vray.Matrix(vray.Vector(1.0, 0.0, 0.0), vray.Vector(0.0, 0.0, 1.0), vray.Vector(0.0, -1.0, 0.0)), vray.Vector(-50, 50, 50));

renderer.startSync();

// Make a group of changes 2 seconds after the render starts

renderer.waitForRenderEnd(2000, function () {

renderer.plugins.renderView.fov = 1.5;

delete renderer.plugins['Sphere0Shape4@node'];

// Commit applies all 3 changes

renderer.commit();

renderer.waitForRenderEnd(6000, function () {

renderer.close();

});

});

});
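The effect of turning autoCommit off can be modeled as a queue of pending changes that commit() flushes. The sketch below is a hypothetical model (DeferredScene is not an App SDK type) that counts scene updates: with automatic commits each change is applied on its own, while with deferred commits the three changes from the example above are applied as one batch. It only illustrates the batching idea and does not describe how V-Ray applies changes internally.

```python
from typing import Callable, Dict, List

Change = Callable[[Dict[str, object]], None]


class DeferredScene:
    """Hypothetical model of auto-commit vs. deferred commit."""

    def __init__(self, auto_commit: bool = True) -> None:
        self.auto_commit = auto_commit
        self.state: Dict[str, object] = {}
        self.updates = 0                  # how many times changes were applied
        self._pending: List[Change] = []

    def change(self, fn: Change) -> None:
        self._pending.append(fn)
        if self.auto_commit:
            self.commit()                 # apply right away, one update per change

    def commit(self) -> None:
        if not self._pending:
            return
        for fn in self._pending:          # apply the whole batch in order
            fn(self.state)
        self._pending.clear()
        self.updates += 1


def three_changes(scene: DeferredScene) -> None:
    # Mirrors the example: add a light, change the FOV, delete a sphere.
    def add_light(s: Dict[str, object]) -> None:
        s["lightOmni"] = "blue"

    def set_fov(s: Dict[str, object]) -> None:
        s["fov"] = 1.5

    def delete_sphere(s: Dict[str, object]) -> None:
        s.pop("Sphere0Shape4@node", None)

    scene.change(add_light)
    scene.change(set_fov)
    scene.change(delete_sphere)


if __name__ == "__main__":
    manual = DeferredScene(auto_commit=False)
    manual.state["Sphere0Shape4@node"] = "sphere"
    three_changes(manual)
    print(manual.updates)  # 0: nothing applied yet
    manual.commit()
    print(manual.updates)  # 1: all three changes applied together
```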

XII. Property Types

The following property types are recognized:

- Basic types: int, bool, float, Color (3 floats, RGB), AColor (4 floats, RGB plus alpha), Vector (3 floats), string (UTF-8), Matrix (3 Vectors), Transform (a Matrix plus a Vector for the translation)

- Objects: references to other plugin instances

- Typed lists: IntList, FloatList, ColorList and VectorList

- Generic heterogeneous lists: the App SDK uses a generic class called Value for the items of a generic list. Generic lists can be nested.

- Output parameters: additional values generated by a plugin that other plugins may use as input. The PluginRef type represents a plugin together with a specific output parameter.

Each type has some language-specific nuances, so see the API reference guide for your binding for details. All types are in the VRay namespace.
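To make the Matrix and Transform types concrete, the sketch below applies a transform to a point in plain Python. It assumes that a Matrix is built from three column vectors and that a Transform maps a point p to M·p + offset; check the API reference for your binding before relying on this convention. The values are those of the LightOmni transform used in the plugin examples, and the Vec3, mat_mul and transform_point names are illustrative, not App SDK types.

```python
from typing import NamedTuple, Tuple


class Vec3(NamedTuple):
    x: float
    y: float
    z: float


# A matrix as three column vectors (assumed convention, see the lead-in).
Mat3 = Tuple[Vec3, Vec3, Vec3]


def mat_mul(m: Mat3, v: Vec3) -> Vec3:
    """M * v = col0 * v.x + col1 * v.y + col2 * v.z."""
    c0, c1, c2 = m
    return Vec3(
        c0.x * v.x + c1.x * v.y + c2.x * v.z,
        c0.y * v.x + c1.y * v.y + c2.y * v.z,
        c0.z * v.x + c1.z * v.y + c2.z * v.z,
    )


def transform_point(m: Mat3, offset: Vec3, p: Vec3) -> Vec3:
    """Apply a Transform (matrix plus translation offset) to a point."""
    r = mat_mul(m, p)
    return Vec3(r.x + offset.x, r.y + offset.y, r.z + offset.z)


if __name__ == "__main__":
    m = (Vec3(1.0, 0.0, 0.0), Vec3(0.0, 0.0, 1.0), Vec3(0.0, -1.0, 0.0))
    offset = Vec3(-50.0, 50.0, 50.0)
    # The local origin lands on the offset.
    print(transform_point(m, offset, Vec3(0.0, 0.0, 0.0)))
    # Local +Y maps to world +Z under this matrix.
    print(transform_point(m, offset, Vec3(0.0, 1.0, 0.0)))
```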

XIII. Animations

The V-Ray App SDK supports rendering of animated scenes. Animated scenes contain animated plugin properties. A plugin property is considered animated if it has a sequence of values, each for a specific time or frame number. These values define key frames, and during sampling V-Ray interpolates between them.
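The sketch below shows what interpolating between key frames means for a single float property. It uses linear interpolation, holding the first and last key values outside the keyed range; this is an assumption for illustration, as the interpolation V-Ray actually applies to a given property type may differ. The interpolate function is hypothetical, not an App SDK call.

```python
from bisect import bisect_right
from typing import List, Tuple


def interpolate(keys: List[Tuple[float, float]], t: float) -> float:
    """Linearly interpolate a keyed float property at time/frame t.

    `keys` is a list of (time, value) pairs sorted by time. Before the first
    key and after the last one, the nearest key value is held.
    """
    if not keys:
        raise ValueError("at least one key frame is required")
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)          # keys[i-1].time <= t < keys[i].time
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    u = (t - t0) / (t1 - t0)            # fraction of the way from t0 to t1
    return v0 + u * (v1 - v0)


if __name__ == "__main__":
    # Hypothetical light intensity keyed at frames 0, 10 and 20.
    intensity = [(0.0, 0.0), (10.0, 100.0), (20.0, 50.0)]
    for frame in (0, 5, 10, 15, 25):
        print(frame, interpolate(intensity, frame))
```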

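The current frame number is available from the frame property of the VRayRenderer (getCurrentFrame() in C++). When a frame is done and more frames follow, the renderer enters the intermediate IDLE_FRAME_DONE state; after the final frame it enters IDLE_DONE. In the examples below, the state-change listener calls continueSequence() on IDLE_FRAME_DONE to start the next frame.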

def onStateChanged(renderer, oldState, newState, instant):
    if oldState == vray.RENDERER_STATE_IDLE_INITIALIZED and newState == vray.RENDERER_STATE_PREPARING:
        print('Sequence started.')
    if newState == vray.RENDERER_STATE_IDLE_FRAME_DONE or newState == vray.RENDERER_STATE_IDLE_DONE:
        print('Image Ready, frame ' + str(renderer.frame) + ' (sequenceEnd = ' + str(renderer.sequenceEnded) + ')')
        if newState == vray.RENDERER_STATE_IDLE_FRAME_DONE:
            # If the sequence has NOT finished - continue with next frame.
            renderer.continueSequence()
        else:
            print('Sequence done. Closing renderer...')

with vray.VRayRenderer() as renderer:
    # Add a listener for the renderer state change event.
    # We will use it to detect when a frame is completed and allow the next one to start.
    renderer.setOnStateChanged(onStateChanged)
    # Load scene from a file.
    renderer.load('animation.vrscene')
    # Maximum paths per pixel for interactive mode. Set a low sample level to complete rendering faster.
    # The default value is unlimited. We have to call this *after* loading the scene.
    renderer.setInteractiveSampleLevel(1)
    # Start rendering a sequence of frames (animation).
    renderer.renderSequence()
    # Wait until the entire sequence has finished rendering.
    renderer.waitForSequenceEnd()

#include <cstdio>
#include "vraysdk.hpp"

using namespace VRay;

void onStateChanged(VRayRenderer &renderer, RendererState oldState, RendererState newState, double instant, void* userData) {
    if (oldState == IDLE_INITIALIZED && newState == PREPARING) {
        printf("Sequence started.\n");
    }
    if (newState == IDLE_FRAME_DONE || newState == IDLE_DONE) {
        printf("Image Ready, frame %d (sequenceEnded = %d)\n", renderer.getCurrentFrame(), renderer.isSequenceEnded());
        if (newState == IDLE_FRAME_DONE) {
            // The sequence has NOT finished - continue with the next frame.
            renderer.continueSequence();
        } else {
            printf("Sequence done. Closing renderer...\n");
        }
    }
}

int main() {
    // Load and initialize the V-Ray App SDK library.
    VRayInit init(NULL, true);
    VRayRenderer renderer;
    // Add a listener for the renderer state change event.
    // We use it to detect when a frame is completed and allow the next one to start.
    renderer.setOnStateChanged(onStateChanged);
    // Load the scene from a file.
    renderer.load("animation.vrscene");
    // Maximum paths per pixel for interactive mode. A low sample level makes rendering complete faster.
    // The default value is unlimited. This must be called *after* loading the scene.
    renderer.setInteractiveSampleLevel(1);
    // Start rendering a sequence of frames (animation).
    renderer.renderSequence();
    // Wait until the entire sequence has finished rendering.
    renderer.waitForSequenceEnd();
    return 0;
}

using (VRayRenderer renderer = new VRayRenderer())
{
    // Add a listener for state changes.
    // It is invoked when the renderer prepares for rendering, renders, finishes or fails.
    renderer.StateChanged += new EventHandler<StateChangedEventArgs>((source, e) =>
    {
        if (e.OldState == VRayRenderer.RendererState.IDLE_INITIALIZED
            && e.NewState == VRayRenderer.RendererState.PREPARING)
        {
            Console.WriteLine("Sequence started.");
        }
        if (e.NewState == VRayRenderer.RendererState.IDLE_FRAME_DONE)
        {
            Console.WriteLine("Image Ready, frame " + renderer.Frame + " (sequenceEnded = " + renderer.IsSequenceEnded + ")");
            // The sequence has NOT finished - continue with the next frame.
            renderer.ContinueSequence();
        }
        if (e.NewState == VRayRenderer.RendererState.IDLE_DONE)
        {
            Console.WriteLine("Sequence done. (sequenceEnded = " +
                renderer.IsSequenceEnded + ") Closing renderer...");
        }
    });
    // Load the scene from a file.
    renderer.Load("animation.vrscene");
    // Maximum paths per pixel for interactive mode. A low sample level makes rendering complete faster.
    // The default value is unlimited. This must be called *after* loading the scene.
    renderer.SetInteractiveSampleLevel(1);
    // Start rendering a sequence of frames (animation).
    renderer.RenderSequence();
    // Wait until the entire sequence has finished rendering.
    renderer.WaitForSequenceEnd();
}

var path = require('path');
// Load the Node.js binding from VRAY_SDK/node.
var vray = require(path.join(process.env.VRAY_SDK, 'node', 'vray'));

var renderer = vray.VRayRenderer();

// Add a listener for the renderer state change event.
// We use it to detect when a frame is completed and allow the next one to start.
renderer.on('stateChanged', function(oldState, newState, instant) {
    if (newState === 'idleFrameDone' || newState === 'idleDone') {
        console.log('Image Ready, frame ' + renderer.frame + ' (sequenceEnded = ' + renderer.sequenceEnded + ')');
        // Check if the sequence has finished rendering.
        if (newState === 'idleDone') {
            // Close the renderer. This call is mandatory in order to free up the event loop.
            // The renderer object is unusable after closing since its resources are freed. It should be released for garbage collection.
            renderer.close();
        } else {
            // The sequence has NOT finished - continue with the next frame.
            renderer.continueSequence();
        }
    } else if (newState.startsWith('idle')) {
        console.log('Unexpected end of render sequence: ', newState);
        renderer.close();
    }
});

// Load the scene from a file asynchronously.
renderer.load('animation.vrscene', function(err) {
    if (err) throw err;
    // Maximum paths per pixel for interactive mode. A low sample level makes rendering complete faster.
    // The default value is unlimited. This must be called *after* loading the scene.
    renderer.setInteractiveSampleLevel(1);
    // Start rendering a sequence of frames (animation).
    renderer.renderSequence();
    // Prevent garbage collection and exiting Node.js until the renderer is closed.
    renderer.keepAlive();
});
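The frame-by-frame handshake shared by the four examples above can be exercised without V-Ray. The sketch below replaces the renderer with a hypothetical, synchronous `MockSequenceRenderer` that pauses in IDLE_FRAME_DONE until `continueSequence()` is called. It is only a model of the control flow: the real renderer is asynchronous, its callbacks also receive an `instant` argument, and its frame numbers come from the scene rather than starting at 0.

```python
# Hypothetical stand-ins for the renderer states named in the text.
IDLE_INITIALIZED = 'IDLE_INITIALIZED'
PREPARING = 'PREPARING'
IDLE_FRAME_DONE = 'IDLE_FRAME_DONE'
IDLE_DONE = 'IDLE_DONE'


class MockSequenceRenderer:
    """Hypothetical, synchronous model of sequence rendering (not the App SDK)."""

    def __init__(self, frame_count, on_state_changed):
        if frame_count < 1:
            raise ValueError("frame_count must be at least 1")
        self.frame_count = frame_count
        self.frame = 0  # simplification: frames are numbered from 0
        self.sequenceEnded = False
        self._state = IDLE_INITIALIZED
        self._callback = on_state_changed
        self._continue = False

    def _set_state(self, new_state):
        old_state, self._state = self._state, new_state
        self._callback(self, old_state, new_state)

    def continueSequence(self):
        self._continue = True

    def renderSequence(self):
        self._set_state(PREPARING)
        while True:
            if self.frame == self.frame_count - 1:
                # Last frame: the sequence ends in IDLE_DONE.
                self.sequenceEnded = True
                self._set_state(IDLE_DONE)
                return
            self._continue = False
            self._set_state(IDLE_FRAME_DONE)
            if not self._continue:
                return  # paused: the handler did not request the next frame
            self.frame += 1


log = []


def on_state_changed(renderer, old_state, new_state):
    # Same decision logic as the handlers in the examples above.
    if new_state in (IDLE_FRAME_DONE, IDLE_DONE):
        log.append(renderer.frame)
        if new_state == IDLE_FRAME_DONE:
            renderer.continueSequence()


MockSequenceRenderer(3, on_state_changed).renderSequence()
print(log)  # [0, 1, 2]
```

If the handler omits the `continueSequence()` call, the mock stops after the first frame, which mirrors why every example above calls it on IDLE_FRAME_DONE.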

XIV. V-Ray Server

A V-Ray server acts as a remote render host during the rendering of a scene. It is a process that listens for render requests on a specific network port; clients, such as instances of the VRayRenderer class, connect to it to delegate part of the image rendering. V-Ray Standalone render servers can also be used. A server cannot start a rendering process on its own, but it plays an essential role in completing distributed tasks initiated by clients. To take advantage of the distributed rendering capabilities of V-Ray, such servers must be running and available for requests.

The V-Ray Application SDK makes it easy to create V-Ray server processes for distributed rendering. The API includes the VRayServer class, which exposes methods for starting and stopping server processes. In addition, the VRayServer class lets users subscribe to server-specific events so that custom logic can be executed when they occur.
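Because a server only helps if it is actually running and listening, a client may want to check which render hosts are reachable before starting a distributed render. The sketch below uses only Python's standard `socket` module and the default port 20207 used in the server example of this section. It merely confirms that something accepts TCP connections on that port and does not speak the V-Ray protocol; `is_server_reachable` and `reachable_hosts` are hypothetical helpers, not App SDK calls.

```python
import socket

# Default V-Ray server listening port, as used in the server example.
DEFAULT_VRAY_SERVER_PORT = 20207


def is_server_reachable(host, port=DEFAULT_VRAY_SERVER_PORT, timeout=2.0):
    """Return True if a TCP connection to host:port opens within timeout seconds.

    Hypothetical helper: it checks only that the port accepts connections,
    not that the listener is a V-Ray server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unknown host, ...
        return False


def reachable_hosts(hosts, port=DEFAULT_VRAY_SERVER_PORT, timeout=2.0):
    """Keep only the hosts that accept connections on the given port."""
    return [host for host in hosts if is_server_reachable(host, port, timeout)]
```

For example, `reachable_hosts(['render01', 'render02'])` (hypothetical host names) returns only the names whose port accepted a connection. The short probe connection may be visible to the server process.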

Server events occur when:

- The server process starts and is ready to accept render requests

- A render client connects to the server to request a distributed rendering task

- A render client disconnects from the server

- A server text message is produced due to a change in the server status or to track the progress of the current job
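The connect and disconnect events lend themselves to simple bookkeeping, for instance keeping a list of the render clients currently attached to a server. The hypothetical `ConnectedHosts` class below is a sketch: its `on_connect` and `on_disconnect` methods accept `(server, host, instant)`, matching the arguments of the connect and disconnect callbacks in the Python server example, so they could be registered with `setOnConnect` and `setOnDisconnect`. Counting connections per host and guarding the state with a lock are precautions based on assumptions (that one host may open several connections and that events may arrive on another thread), not documented behavior.

```python
import threading


class ConnectedHosts:
    """Hypothetical tracker of render clients connected to a server."""

    def __init__(self):
        # Lock in case events are delivered on a non-main thread (assumption).
        self._lock = threading.Lock()
        self._hosts = {}  # host -> number of open connections

    def on_connect(self, server, host, instant):
        with self._lock:
            self._hosts[host] = self._hosts.get(host, 0) + 1

    def on_disconnect(self, server, host, instant):
        with self._lock:
            count = self._hosts.get(host, 0) - 1
            if count > 0:
                self._hosts[host] = count
            else:
                # Last connection closed (or unknown host): forget it.
                self._hosts.pop(host, None)

    def current(self):
        """Return the connected host names, sorted."""
        with self._lock:
            return sorted(self._hosts)
```

With a server created as in the example, the tracker would be wired up with `server.setOnConnect(tracker.on_connect)` and `server.setOnDisconnect(tracker.on_disconnect)`; bound methods receive the same `(server, host, instant)` arguments as the plain functions shown there.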

The following code snippets demonstrate how VRayServer can be instantiated and used to start a server process.

from __future__ import print_function

# The directory containing the vray shared object should be present in the PYTHONPATH environment variable.
# Try to import the vray module from VRAY_SDK/python if it is not in PYTHONPATH.
import sys, os
VRAY_SDK = os.environ.get('VRAY_SDK')
if VRAY_SDK:
    sys.path.append(os.path.join(VRAY_SDK, 'python'))
import vray

try:
    # Compatibility with Python 2.7.
    input = raw_input
except NameError:
    pass

def printStarted(server, instant):
    print('Server started. Press Enter to continue...')

def printRendererConnect(server, host, instant):
    print('Host {0} connected to server.'.format(host))

def printRendererDisconnect(server, host, instant):
    print('Host {0} disconnected from server.'.format(host))

# Listening port for the V-Ray server. The default value is 20207.
options = { 'portNumber': 20207 }

# Create an instance of VRayServer with custom options.
# The server is automatically closed after the `with` block.
with vray.VRayServer(**options) as server:
    # Add a listener for the server start event. It is invoked when the server has started and is ready to accept render requests.
    server.setOnStart(printStarted)
    # Add a listener for the connect event. It is invoked when a renderer connects to the server.
    server.setOnConnect(printRendererConnect)
    # Add a listener for the disconnect event. It is invoked when a renderer disconnects from the server.
    server.setOnDisconnect(printRendererDisconnect)
    # Start listening for connections on the specified port.
    server.start()
    # Press Enter to exit (the server is closed when the `with` block ends).
    input('')

#include <cstdio>
#include "vraysrv.hpp"

using namespace VRay;
using namespace std;

void printStarted(VRayServer &server, double instant, void* userData) {
    printf("Server started.\n");
}

void printRendererConnect(VRayServer &server, const char* host, double instant, void* userData) {
    printf("Host %s connected to server.\n", host);
}

void printRendererDisconnect(VRayServer &server, const char* host, double instant, void* userData) {
    printf("Host %s disconnected from server.\n", host);
}